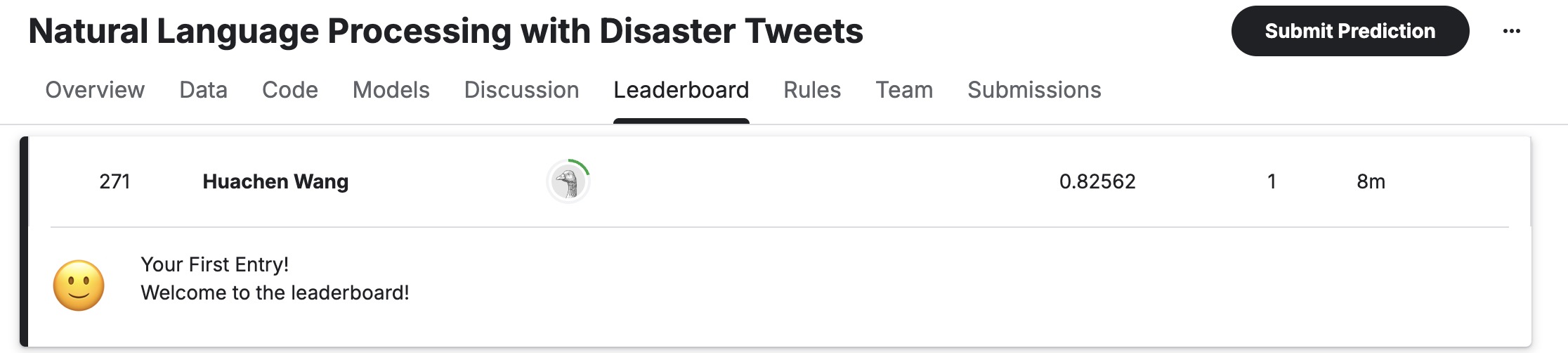

In June 2025, I participated in my very first NLP competition on Kaggle — the classic Disaster Tweets Classification challenge. I entered with the mindset of simply trying to run BERT once and learn the ropes. What I didn’t expect was that just a few days later, I’d proudly see my name appear on the Kaggle public leaderboard, ranked #271 globally with an F1 score of 0.82562.

This post is a reflection on that experience: the things that went wrong, the breakthroughs that followed, and what I learned along the way. For any beginner wondering whether they can break into NLP, I'm here to say: you absolutely can.

🧠 Why This Project?

Coming from a backend engineering background, I had always admired AI from afar — it looked powerful but intimidating. When I came across this competition, it felt like the perfect entry point: the task was to classify whether a tweet was reporting a real disaster. The dataset was small, messy, and human — perfect for testing how much value a language model could extract from noisy text.

What made it exciting wasn’t just the model performance, but the idea that I could use language understanding as a tool. That made it personal.

💻 Running on Apple M2 Pro

Yes, I trained BERT locally on a MacBook with M2 Pro.

import torch

# Prefer Apple's Metal (MPS) backend when available; otherwise fall back to CPU
device = torch.device("mps" if torch.backends.mps.is_available() else "cpu")
model.to(device)

Tips for M2 Pro (MPS backend):

Stick to a small batch_size (e.g., 4), or risk running out of memory (OOM).

Don’t use fp16; MPS doesn’t fully support it.

Training speed is slower than CUDA, but good enough for small datasets.

Hugging Face’s Trainer API worked smoothly with MPS once setup was correct.

⚠️ First Mistakes I Made

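Eval dataset had no labels → KeyError: 'eval_f1'

I mistakenly used the test set (which has no labels) as the validation set. Without labels there is nothing to score predictions against, so the eval_f1 value that metric_for_best_model="f1" looks up is never produced. Lesson learned: always double-check your dataset fields.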

Raw tweets confuse models

Initial results were poor: accuracy looked okay, but precision and recall were lopsided. Cleaning the text (removing URLs, mentions, and symbols) improved F1 dramatically.
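Here is a minimal sketch of that kind of cleaning using only the standard library. The function name clean_tweet and the exact regex patterns are illustrative assumptions, not necessarily the rules behind the score reported in this post:

```python
import re

# Patterns are assumptions for illustration; tune them to your data
URL_RE = re.compile(r"https?://\S+|www\.\S+")   # links such as http://t.co/...
MENTION_RE = re.compile(r"@\w+")                # @username mentions
SYMBOL_RE = re.compile(r"[^A-Za-z0-9\s]")       # anything that is not a letter, digit, or space
SPACE_RE = re.compile(r"\s+")                   # runs of whitespace


def clean_tweet(text: str) -> str:
    """Strip URLs, @mentions, and non-alphanumeric symbols from a tweet."""
    text = URL_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)
    text = SYMBOL_RE.sub(" ", text)
    # Collapse the gaps left behind by the removals
    return SPACE_RE.sub(" ", text).strip()


print(clean_tweet("Forest fire near La Ronge! @user http://t.co/abc #wildfire"))
# prints: Forest fire near La Ronge wildfire
```

Note that this version keeps the word inside a hashtag but drops the # itself, and it also removes apostrophes and non-ASCII characters. Whether that helps or hurts is worth checking against your validation F1.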

Thinking more code = better results

I almost got lost adding complexity, until I realized: clean input, a proper train/val split, and a solid pre-trained model were already more than enough.
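One way to get a proper train/val split is to carve the validation set out of the labeled training data, so it always has labels. A minimal standard-library sketch, assuming each row is a dict with a "target" label field (the same column name used in the submission file). The names split_train_val, val_fraction, seed, and label_key are hypothetical:

```python
import random
from collections import defaultdict


def split_train_val(rows, val_fraction=0.2, seed=42, label_key="target"):
    """Stratified split of labeled rows into (train, val) lists."""
    by_label = defaultdict(list)
    for row in rows:
        if label_key not in row:
            # Unlabeled rows (such as a test set) cannot be used for validation
            raise ValueError(f"row is missing the '{label_key}' label: {row}")
        by_label[row[label_key]].append(row)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, val = [], []
    for label_rows in by_label.values():
        rng.shuffle(label_rows)
        # Take the same fraction from every class, at least one row each
        n_val = max(1, round(len(label_rows) * val_fraction))
        val.extend(label_rows[:n_val])
        train.extend(label_rows[n_val:])
    return train, val


# Toy rows standing in for the labeled training data
rows = [{"text": f"tweet {i}", "target": i % 2} for i in range(10)]
train, val = split_train_val(rows)
print(len(train), len(val))  # prints: 8 2
```

Splitting per class keeps the ratio of disaster to non-disaster tweets roughly the same in both sets, which matters when the metric is F1 on the positive class.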

✅ My Training Configuration

from transformers import TrainingArguments

training_args = TrainingArguments(
    output_dir="./results",
    evaluation_strategy="epoch",  # newer transformers releases name this eval_strategy
    save_strategy="epoch",  # must match the evaluation schedule for load_best_model_at_end
    per_device_train_batch_size=4,  # small batch to avoid OOM on MPS
    per_device_eval_batch_size=8,
    num_train_epochs=3,
    logging_dir="./logs",
    logging_steps=10,
    load_best_model_at_end=True,
    metric_for_best_model="f1",
    greater_is_better=True,
)

For metrics:

import numpy as np
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

def compute_metrics(eval_pred):
    # eval_pred unpacks into the raw model logits and the true labels
    logits, labels = eval_pred
    # Pick the higher-scoring class for each tweet
    preds = np.argmax(logits, axis=1)
    return {
        "accuracy": accuracy_score(labels, preds),
        "f1": f1_score(labels, preds, average="binary"),
        "precision": precision_score(labels, preds, average="binary"),
        "recall": recall_score(labels, preds, average="binary"),
    }

🎯 When It All Came Together

After a few iterations, I got the following results on the validation set:

accuracy = 0.826

f1 = 0.792

And then I submitted the predictions to Kaggle. When I refreshed the page:

Public Leaderboard: F1 = 0.82562, Rank = #271 globally

For my first-ever submission, on a local machine, that moment was absolutely unforgettable.
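A side note on the validation numbers above: accuracy and F1 can diverge because F1 ignores true negatives and only rewards getting the positive (disaster) class right. A small sketch of the arithmetic, where binary_metrics is a hypothetical helper and the confusion-matrix counts are invented purely for illustration (they are not the validation results from this run):

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up counts, chosen only to show the gap between accuracy and F1
m = binary_metrics(tp=60, fp=10, fn=30, tn=100)
print({name: round(value, 3) for name, value in m.items()})
# prints: {'accuracy': 0.8, 'precision': 0.857, 'recall': 0.667, 'f1': 0.75}
```

In this toy case the 100 true negatives lift accuracy to 0.8, while the 30 missed disasters pull recall, and with it F1, down to 0.75.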

📤 Submission Pipeline (Summarized)

import numpy as np
import pandas as pd

# Run prediction
predictions = trainer.predict(tokenized_test)
pred_labels = np.argmax(predictions.predictions, axis=1)

# Create submission file
submission = pd.DataFrame({
    "id": test_df["id"],
    "target": pred_labels,
})
submission.to_csv("submission.csv", index=False)

💡 What I Learned

Clean data > Complex modeling.

Trainer API is a lifesaver for beginners.

MPS (Mac) works — not perfect, but usable.

Leaderboard isn’t magic — it rewards careful thinking.

Confidence grows when you build.

🏅 Final Milestone

🎖️ Milestone Achieved:

Public F1 Score: 0.82562

Global Rank: #271

First time ever on the Kaggle Leaderboard! ✅

❤️ Closing Thoughts

As someone coming from backend development and transitioning into AI, this small victory meant the world to me. It wasn’t just about the rank — it was the first time I saw an idea, a model, and a submission come together to solve a real-world NLP task.

And that’s the magic of learning by doing.